instruction fine-tuning

- step 1: pretraining – only focused on predicting the next token

- step 2: supervised fine-tuning (SFT) on various tasks – summarization, translation, sentiment analysis, text classification, …

- using cross-entropy loss

- step 3: instruction fine-tuning using RLHF (reinforcement learning with human feedback) – model provides several responses for a given prompt, human ranks them

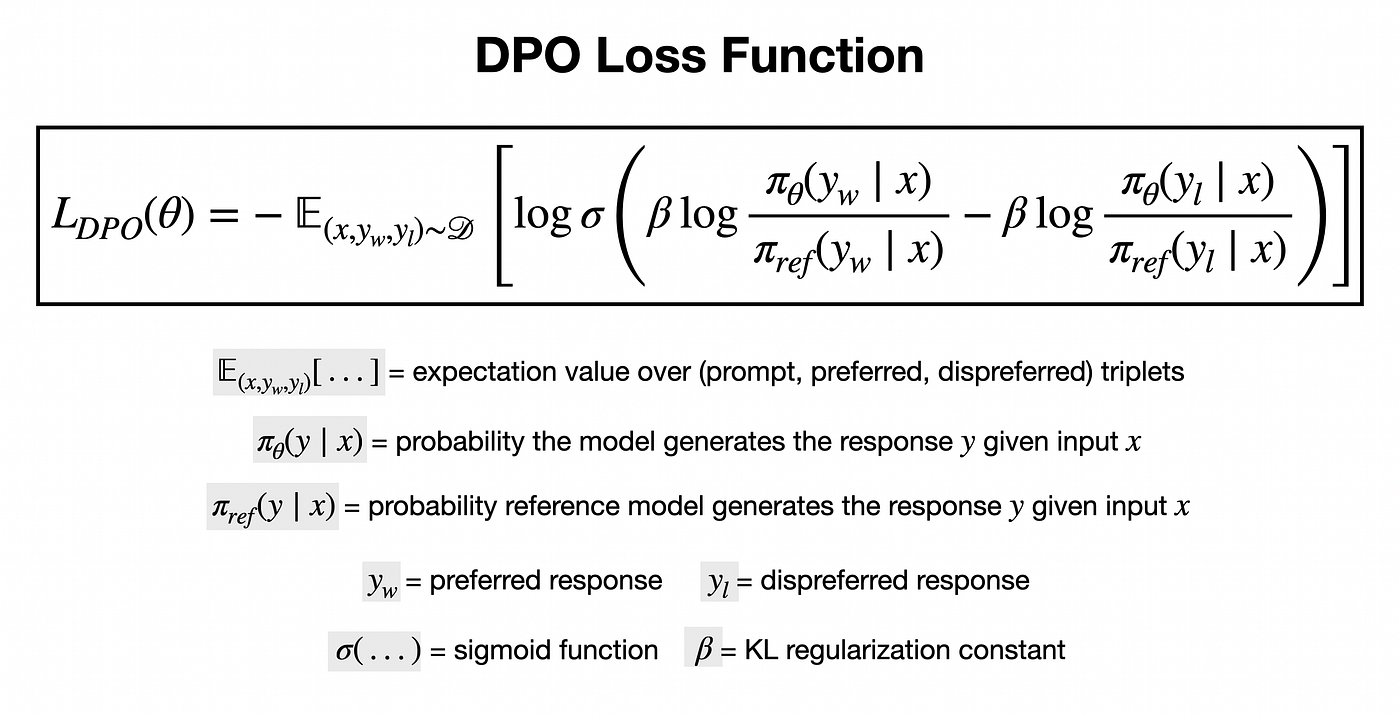

- direct preferred optimization (DPO)

- we use pairwise preferences

- … prompt

- … preferred output

- … less preferred output

- … sigmoid function

- … regularization parameter

- … probability distribution of our model with parameters

- … probability dist. of the reference model we get after SFT

- we want to maximize the first term and to minimize the second term

- we use pairwise preferences

- direct preferred optimization (DPO)